How to run the Azure AI Vision and Azure AI Document Intelligence engine On-Premise

The Azure AI Vision engine, which MetaServer uses for the Extract Text (Azure AI Vision) rule, and the Azure AI Document Intelligence engine, which MetaServer uses for the Extract Text (Azure AI Document Intelligence) rule, can both be run on-premise as containers through the Docker engine.

Running the engine on-premise can help you meet security and data governance requirements.

Local Azure AI Vision engine

Local Azure AI Document Intelligence engine (Setup)

1) An active Azure AI Vision or Azure AI Document Intelligence resource: you will need to have a valid key for an Azure AI Vision or Azure AI Document Intelligence resource. If you haven’t already applied for one, you can find instructions on our Extract Text (Azure AI Vision) online help page and Extract Text (Azure AI Document Intelligence) online help page.

Once you have your key, please keep the following information on hand:

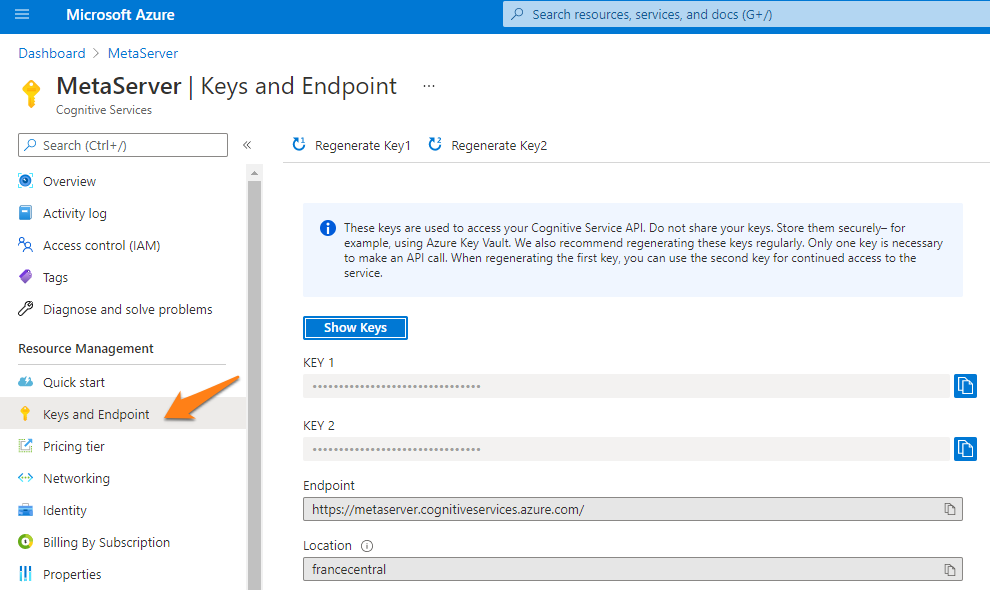

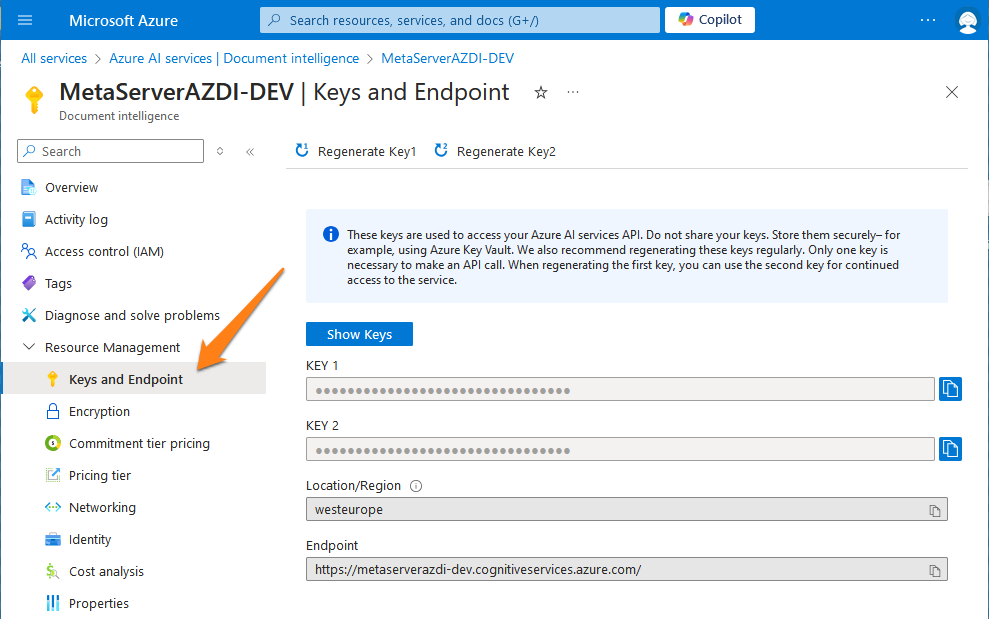

{API_KEY}: One of the two available resource keys on the Keys and Endpoint page.

{ENDPOINT_URI}: The endpoint as provided on the Keys and Endpoint page.

Azure AI Vision resource keys

Azure AI Document Intelligence resource keys

2) The Docker Engine on your host system: you need the Docker Engine installed on your host computer.

You can find instructions on how to install the Docker environment through Docker’s online documentation.

NOTE: In case your host computer shuts down and needs to be restarted, by default, you will need to log in and launch the Docker engine manually. To automatically launch the Docker engine at a system (re)start, enable the “Start Docker Desktop when you log in” option in your Docker client’s settings.

3) Approval from Microsoft to run the Azure Cognitive Services container on your system: you will need to apply for permission to run the container from Microsoft through the following online request form.

The form requests information about you, your company, and the user scenario for which you’ll use the container. After you submit the form, the Microsoft Azure Cognitive Services team will review it and email you with a decision within 10 business days.

IMPORTANT:

- On the form, you must use an email address associated with an Azure subscription ID.

- The Azure resource you use to run the container must have been created with the approved Azure subscription ID.

- Check your email (both inbox and junk folders) for updates on the status of your application from Microsoft.

After you’re approved, you will be able to run the container after downloading it from the Microsoft Container Registry (MCR). This will be described in more detail in the next step.

You won’t be able to run the container if your Azure subscription has not been approved.

After you’ve received permission from Microsoft to run the Azure AI Vision (AZVI) or Azure AI Document Intelligence (AZDI) container on your system, you can install and run the container through your Windows Command Prompt.

IMPORTANT: make sure the logged-in user has the appropriate installation rights in order to proceed. If you don’t have permission, please contact your IT administrator to (temporarily) elevate your user rights.

NOTE: the {ENDPOINT_URI} and {API_KEY} variables should be replaced by the endpoint and key of your AZVI or AZDI resource.

If you’re not sure where to find this information, please refer to the following online help.

Step 1: Enter one of the following commands in your Windows Command Prompt:

Command 1 (for AZVI model version 3.2):

docker run -dit --restart unless-stopped -it -p 5000:5000 --memory 18g --cpus 6 mcr.microsoft.com/azure-cognitive-services/vision/read:3.2-model-2022-04-30 Eula=accept Billing={ENDPOINT_URI} ApiKey={API_KEY}

This command:

- Downloads and runs the Read OCR container from the generally available AZVI 3.2 model’s container image. (If you want to use a “preview” version instead of the current generally available version, replace read:3.2-model-2022-04-30 with, for example, read:3.2-model-2022-01-30-preview.)

- Allocates 6 CPU cores and 18 gigabytes (GB) of memory (the specifications recommended by Microsoft).

- Exposes TCP port 5000 and allocates a pseudo-TTY for the container.

- Automatically restarts the container after it stops (e.g. after a system crash), unless it was manually stopped.

If your system has a different number of CPU cores or a different amount of memory available, adjust the values of the corresponding options (--cpus 6 and --memory 18g) accordingly.

As an example, we have allocated TCP port 5000, but you can change it to any available port. To do so, change the first number in the corresponding option (-p 5000:5000), which is the port on your host system; the second number is the port inside the container.
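If you need to vary the port or resource limits per host, the command above can also be assembled programmatically. The following is a minimal Python sketch, not part of MetaServer or Docker: the function name `build_run_command` and its parameters are hypothetical, and it only rebuilds the argument list of the command shown above. The repeated `-it` is left out because `-dit` already includes `-i` and `-t`.

```python
def build_run_command(image, endpoint_uri, api_key,
                      host_port=5000, memory_gb=18, cpus=6):
    """Return the `docker run` argument list for an Azure AI container."""
    return [
        "docker", "run", "-dit",
        "--restart", "unless-stopped",
        # host_port is the port on your machine; 5000 is the port inside the container.
        "-p", f"{host_port}:5000",
        "--memory", f"{memory_gb}g",
        "--cpus", str(cpus),
        image,
        "Eula=accept",
        f"Billing={endpoint_uri}",
        f"ApiKey={api_key}",
    ]


# The placeholders are kept as in the command above; replace them with your own values.
command = build_run_command(
    "mcr.microsoft.com/azure-cognitive-services/vision/read:3.2-model-2022-04-30",
    endpoint_uri="{ENDPOINT_URI}",
    api_key="{API_KEY}",
    host_port=5001,   # example: use host port 5001 instead of 5000
    memory_gb=16,     # example: a host with less memory available
    cpus=4,           # example: a host with fewer CPU cores
)

# Joining with spaces is fine here because no argument contains a space.
print(" ".join(command))
```

The resulting list can be passed to `subprocess.run(command)` once the placeholders have been replaced, or the printed line can be pasted into the Command Prompt.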

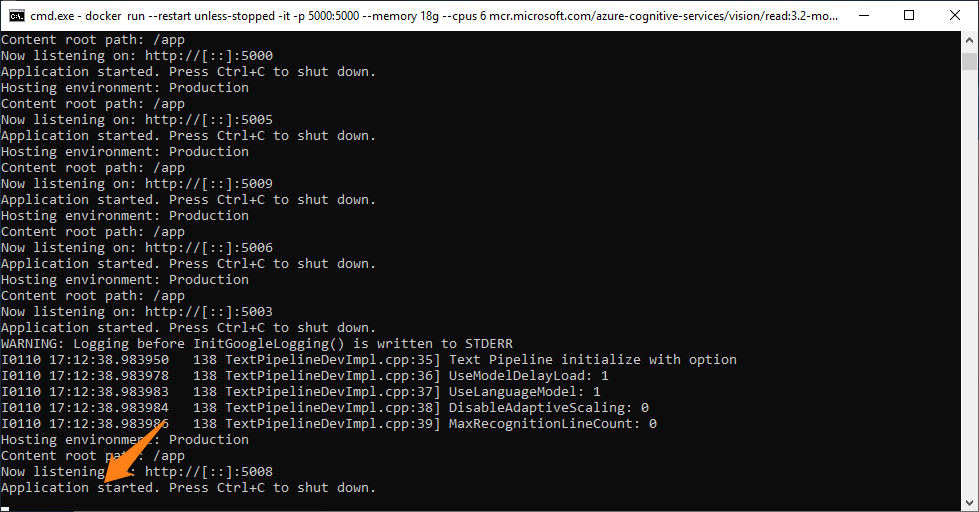

After you run the command, wait until it returns the confirmation message “Application started”:

Command 1 (for AZDI Invoice model version 3.0):

docker run -dit --restart unless-stopped -it -p 5000:5000 --memory 18g --cpus 8 mcr.microsoft.com/azure-cognitive-services/form-recognizer/invoice-3.0 Eula=accept Billing={ENDPOINT_URI} ApiKey={API_KEY}

This command:

- Downloads and runs the generally available Form Recognizer Invoice container, version 3.0. (If you want to use a “preview” version instead of the current generally available version, replace invoice-3.0 with, for example, invoice-3.0-model-2023-10-31-preview.)

- Allocates 8 CPU cores and 18 gigabytes (GB) of memory (the specifications recommended by Microsoft).

- Exposes TCP port 5000 and allocates a pseudo-TTY for the container.

- Automatically restarts the container after it stops (e.g. after a system crash), unless it was manually stopped.

If your system has a different number of CPU cores or a different amount of memory available, adjust the values of the corresponding options (--cpus 8 and --memory 18g) accordingly.

As an example, we have allocated TCP port 5000, but you can change it to any available port. To do so, change the first number in the corresponding option (-p 5000:5000), which is the port on your host system; the second number is the port inside the container.

Command 2 (for AZDI READ model version 3.0):

docker run -dit --restart unless-stopped -it -p 5000:5000 --memory 18g --cpus 8 mcr.microsoft.com/azure-cognitive-services/form-recognizer/read-3.0 Eula=accept Billing={ENDPOINT_URI} ApiKey={API_KEY}

This command:

- Downloads and runs the generally available Form Recognizer READ container, version 3.0. (If you want to use a “preview” version instead of the current generally available version, replace read-3.0 with, for example, read-3.0-model-2023-10-31-preview.)

- Allocates 8 CPU cores and 18 gigabytes (GB) of memory (the specifications recommended by Microsoft).

- Exposes TCP port 5000 and allocates a pseudo-TTY for the container.

- Automatically restarts the container after it stops (e.g. after a system crash), unless it was manually stopped.

If your system has a different number of CPU cores or a different amount of memory available, adjust the values of the corresponding options (--cpus 8 and --memory 18g) accordingly.

As an example, we have allocated TCP port 5000, but you can change it to any available port. To do so, change the first number in the corresponding option (-p 5000:5000), which is the port on your host system; the second number is the port inside the container.

Command 3 (for AZDI ID Document model version 3.0):

docker run -dit --restart unless-stopped -it -p 5000:5000 --memory 18g --cpus 8 mcr.microsoft.com/azure-cognitive-services/form-recognizer/id-document-3.0 Eula=accept Billing={ENDPOINT_URI} ApiKey={API_KEY}

This command:

- Downloads and runs the generally available AI Document Intelligence ID Document container, version 3.0. (If you want to use a “preview” version instead of the current generally available version, replace id-document-3.0 with, for example, id-document-3.0-model-2023-10-31-preview.)

- Allocates 8 CPU cores and 18 gigabytes (GB) of memory (the specifications recommended by Microsoft).

- Exposes TCP port 5000 and allocates a pseudo-TTY for the container.

- Automatically restarts the container after it stops (e.g. after a system crash), unless it was manually stopped.

If your system has a different number of CPU cores or a different amount of memory available, adjust the values of the corresponding options (--cpus 8 and --memory 18g) accordingly.

As an example, we have allocated TCP port 5000, but you can change it to any available port. To do so, change the first number in the corresponding option (-p 5000:5000), which is the port on your host system; the second number is the port inside the container.

Command 4 (for AZDI Receipt model version 3.0):

docker run -dit --restart unless-stopped -it -p 5000:5000 --memory 18g --cpus 8 mcr.microsoft.com/azure-cognitive-services/form-recognizer/receipt-3.0 Eula=accept Billing={ENDPOINT_URI} ApiKey={API_KEY}

This command:

- Downloads and runs the generally available AI Document Intelligence Receipt container, version 3.0. (If you want to use a “preview” version instead of the current generally available version, replace receipt-3.0 with, for example, receipt-3.0-model-2023-10-31-preview.)

- Allocates 8 CPU cores and 18 gigabytes (GB) of memory (the specifications recommended by Microsoft).

- Exposes TCP port 5000 and allocates a pseudo-TTY for the container.

- Automatically restarts the container after it stops (e.g. after a system crash), unless it was manually stopped.

If your system has a different number of CPU cores or a different amount of memory available, adjust the values of the corresponding options (--cpus 8 and --memory 18g) accordingly.

As an example, we have allocated TCP port 5000, but you can change it to any available port. To do so, change the first number in the corresponding option (-p 5000:5000), which is the port on your host system; the second number is the port inside the container.

Command 5 (for AZDI Other Forms model version 3.0):

docker run -dit --restart unless-stopped -it -p 5000:5000 --memory 18g --cpus 8 mcr.microsoft.com/azure-cognitive-services/form-recognizer/document-3.0 Eula=accept Billing={ENDPOINT_URI} ApiKey={API_KEY}

This command:

- Downloads and runs the generally available AI Document Intelligence Other Forms container, version 3.0. (If you want to use a “preview” version instead of the current generally available version, replace document-3.0 with, for example, document-3.0-model-2023-10-31-preview.)

- Allocates 8 CPU cores and 18 gigabytes (GB) of memory (the specifications recommended by Microsoft).

- Exposes TCP port 5000 and allocates a pseudo-TTY for the container.

- Automatically restarts the container after it stops (e.g. after a system crash), unless it was manually stopped.

If your system has a different number of CPU cores or a different amount of memory available, adjust the values of the corresponding options (--cpus 8 and --memory 18g) accordingly.

As an example, we have allocated TCP port 5000, but you can change it to any available port. To do so, change the first number in the corresponding option (-p 5000:5000), which is the port on your host system; the second number is the port inside the container.
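The five AZDI commands above differ only in the image name, so they can be generated from a small lookup table. The sketch below is illustrative only: the names `AZDI_IMAGES` and `azdi_command` are hypothetical, and the image names are copied from the commands above without checking them against the registry.

```python
REGISTRY = "mcr.microsoft.com/azure-cognitive-services/form-recognizer"

# Model names from the command headings above, mapped to the image names in those commands.
AZDI_IMAGES = {
    "Invoice": "invoice-3.0",
    "READ": "read-3.0",
    "ID Document": "id-document-3.0",
    "Receipt": "receipt-3.0",
    "Other Forms": "document-3.0",
}


def azdi_command(model, host_port=5000):
    """Return the docker run command line for one AZDI model.

    The {ENDPOINT_URI} and {API_KEY} placeholders are left in place on purpose.
    """
    image = f"{REGISTRY}/{AZDI_IMAGES[model]}"
    return (
        "docker run -dit --restart unless-stopped "
        f"-p {host_port}:5000 --memory 18g --cpus 8 {image} "
        "Eula=accept Billing={ENDPOINT_URI} ApiKey={API_KEY}"
    )


print(azdi_command("Receipt"))
print(azdi_command("Invoice", host_port=5001))
```

An unknown model name raises a `KeyError`, which is usually preferable to silently starting the wrong container.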

After you run one of these commands, wait until it returns the confirmation message “Application started”:

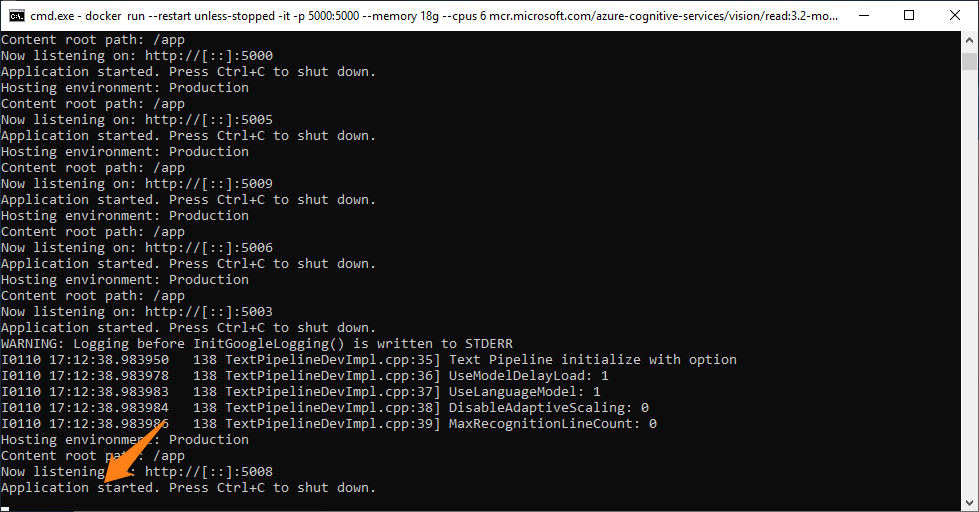
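If you start the container from a script rather than by hand, you can look for the same confirmation message in the container’s log output. The following is a rough sketch under a few assumptions: `docker logs` is a standard Docker CLI command, but the function names, the timeout values, and the injectable `read_logs` parameter (there so the polling loop can be tried without Docker) are hypothetical.

```python
import subprocess
import time


def has_started(log_text):
    """Return True once the log contains the confirmation message."""
    return "Application started" in log_text


def wait_until_started(container, timeout_s=600, poll_s=5, read_logs=None):
    """Poll the container's logs until the confirmation message appears.

    `container` is the container name or the ID printed by `docker run -d`.
    Returns True if the message was seen before the timeout, otherwise False.
    """
    if read_logs is None:
        def read_logs(name):
            result = subprocess.run(
                ["docker", "logs", name],
                capture_output=True, text=True, check=False,
            )
            # Container output may arrive on stdout or stderr, so check both.
            return result.stdout + result.stderr

    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if has_started(read_logs(container)):
            return True
        time.sleep(poll_s)
    return False
```

A call such as `wait_until_started("my-azdi-container")` (a made-up container name) would then block until the engine is ready or ten minutes have passed.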

Step 2: you can choose to close the Command Prompt window or leave it open. The container will keep running either way.

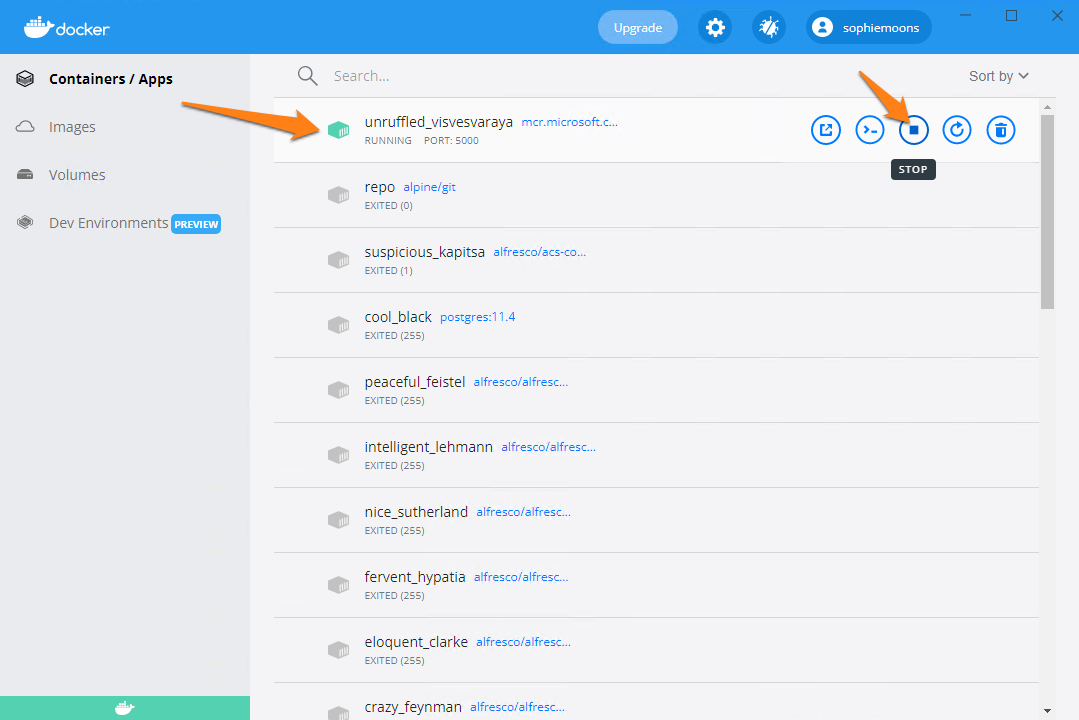

Step 3: you can always check, stop, restart or delete your running containers in your Docker Desktop client under the “Container / Apps” tab.

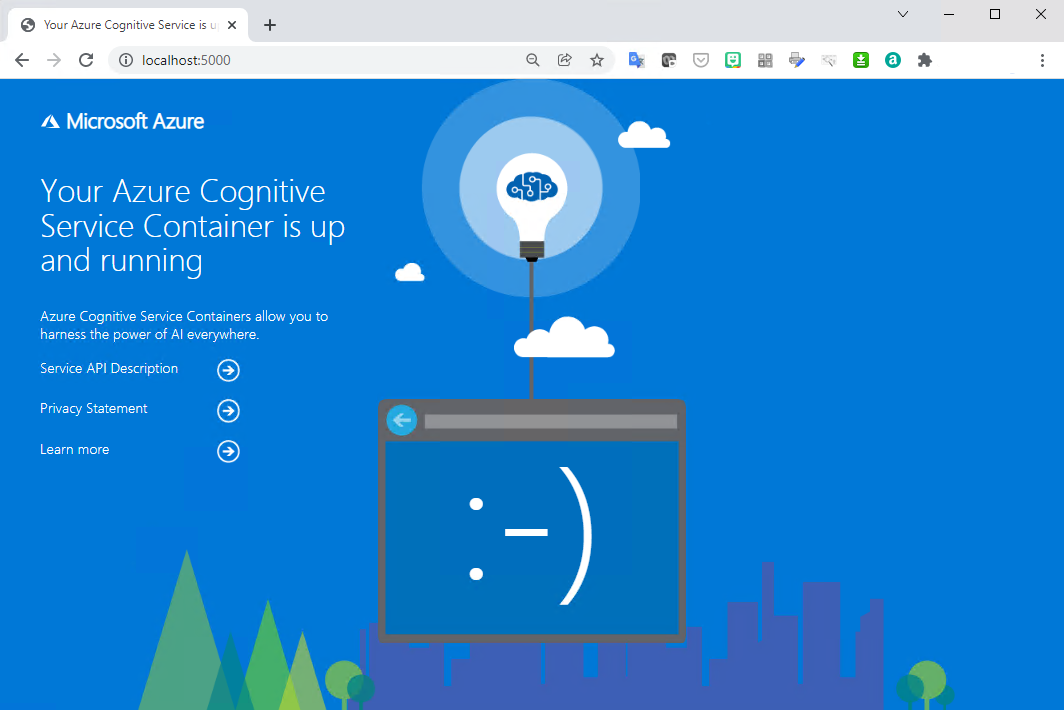
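The same actions are also available from the Docker command line, which can be handy on a server without the desktop client. The sketch below maps each action to its standard Docker CLI subcommand (`docker ps`, `docker stop`, `docker restart`, `docker rm`); the helper names `docker_action` and `run_action` are hypothetical.

```python
import subprocess

# Docker CLI subcommands corresponding to the actions in the Docker Desktop tab.
ACTIONS = {
    "check": ["docker", "ps", "--all"],   # list containers, including stopped ones
    "stop": ["docker", "stop"],
    "restart": ["docker", "restart"],
    "delete": ["docker", "rm"],           # the container must be stopped first
}


def docker_action(action, container=None):
    """Return the argument list for one action; `container` is a name or ID."""
    args = list(ACTIONS[action])
    if action != "check":
        if not container:
            raise ValueError(f"'{action}' needs a container name or ID")
        args.append(container)
    return args


def run_action(action, container=None):
    """Run the action and return Docker's textual output."""
    result = subprocess.run(
        docker_action(action, container),
        capture_output=True, text=True, check=True,
    )
    return result.stdout


print(docker_action("check"))
print(docker_action("restart", "{CONTAINER_ID}"))  # placeholder, not a real ID
```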

Step 4: as a final test, open your local URL in a browser. In our example, we allocated TCP port 5000, so we use the URL “http://localhost:5000”.

If everything went correctly, it should show you the following splash page:

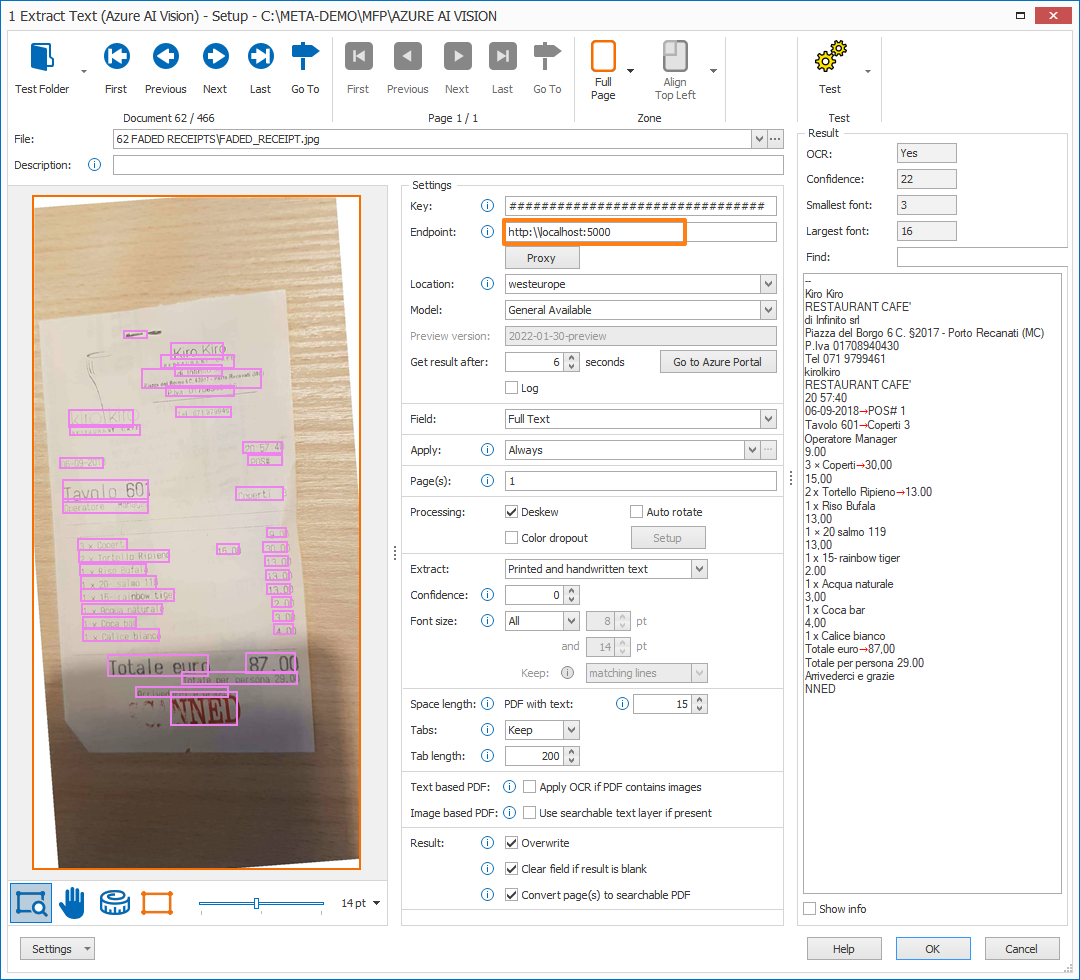

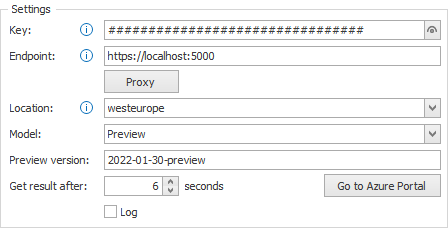
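The browser test can also be scripted, for example as part of a monitoring job. This is a minimal sketch using only the Python standard library; the function names are hypothetical, and it assumes the container answers plain HTTP on the port you allocated, as in the example URL above. Microsoft’s container documentation also describes additional paths such as `/ready` and `/status`; treat those as something to verify for your image before relying on them.

```python
import urllib.error
import urllib.request


def local_url(port=5000, path="/"):
    """Build the URL of the local container (plain HTTP, as in the example above)."""
    return f"http://localhost:{port}{path}"


def is_reachable(url, timeout_s=5):
    """Return True if the URL answers with HTTP status 200, otherwise False."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status.
        return False


print(local_url())  # http://localhost:5000/
```

Calling `is_reachable(local_url())` returns `True` only once the container is up and serving its splash page.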

In your Extract Text (Azure AI Vision) rule:

Step 1: Enter the same API key you’ve used to set up your local Azure AI Vision engine.

Step 2: Enter the endpoint URL with the allocated port you’ve used to set up your local AZVI engine. In our example, we’ve used TCP port 5000, so our endpoint URL is:

http://localhost:5000

Step 3: Select the model you’ve used for your local AZVI engine. For example, if you set up your container with the “3.2 2022-01-30-preview” model, the settings are:

Model: Preview

Preview version: 2022-01-30-preview

If you set up your container with the generally available model, you only need to select “General Available” as your Model. MetaServer automatically uses the latest model version, so you don’t need to specify it.

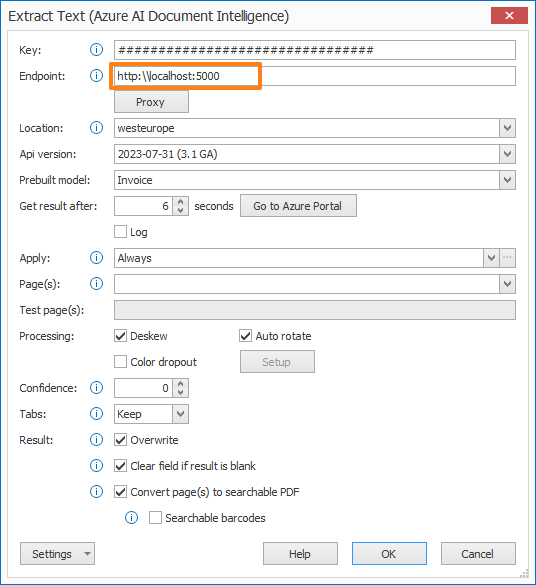

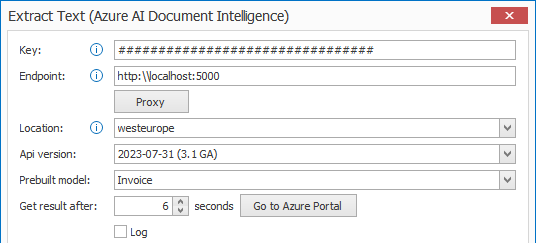

In your Extract Text (Azure AI Document Intelligence) rule:

Step 1: Enter the same API key you’ve used to set up your local Azure AI Document Intelligence engine.

Step 2: Enter the endpoint URL with the allocated port you’ve used to set up your local AZDI engine. In our example, we’ve used TCP port 5000, so our endpoint URL is:

http://localhost:5000

Step 3: Select the API version and model you’ve used for your local AZDI engine. For example, if you set up your container with the “2023-07-31 (3.1 GA)” API version and the “Invoice” model, the settings are:

Api Version: 2023-07-31 (3.1 GA)

Prebuilt model: Invoice

If you set up your container with the preview API version, you only need to select “2023-10-31 Preview” as your Api Version.

If your server needs to be fully disconnected from the internet, disconnected containers will enable you to use Azure services completely offline.

You can find more information about this capability and how to request access to a disconnected container here:

https://learn.microsoft.com/en-us/azure/cognitive-services/containers/disconnected-containers